Product Placement

Objective

To design an advertisement detection and integration system for multimedia videos, useful for next-generation online publicity.

Team

This is a collaborative work between:

- The ADAPT Centre, Trinity College Dublin

- Huawei Ireland Research Center, Dublin

Description

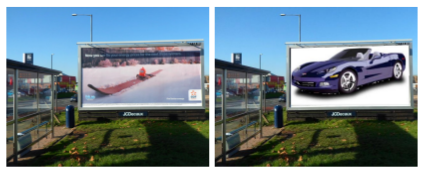

With the rapid development of internet services, the volume of online multimedia video has surged. Viewers can avoid adverts in these videos using skip-ad buttons and ad blockers, which severely limits the reach of online marketing and advertising. In this project, we address this problem by seamlessly integrating new adverts into videos in place of existing ones. We design a system that localizes an existing advert in an image frame (from the video sequence) and replaces it with a new target advert.

(From left to right) Original image, Augmented image with new target advert.
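As a rough illustration of the replacement step, the sketch below pastes a new advert into an axis-aligned box in a frame. It is a simplified stand-in, not the project's integration method: the function name `replace_region`, the `(top, left, bottom, right)` box convention, and the nearest-neighbour resize are all assumptions made for this example, and a real billboard seen at an angle would need a perspective-aware warp and blending.

```python
import numpy as np

def replace_region(frame, advert, box):
    """Paste `advert` into `frame` inside `box` (hypothetical helper).

    frame:  H x W x 3 array, the video frame
    advert: h x w x 3 array, the new target advert
    box:    (top, left, bottom, right), bottom/right exclusive
    """
    top, left, bottom, right = box
    height, width = bottom - top, right - left
    # Nearest-neighbour resize: map each output row/column to a source index.
    row_idx = np.arange(height) * advert.shape[0] // height
    col_idx = np.arange(width) * advert.shape[1] // width
    resized = advert[row_idx][:, col_idx]
    out = frame.copy()  # leave the input frame untouched
    out[top:bottom, left:right] = resized
    return out

# Toy usage: a black 6x8 frame and a white 2x2 "advert".
frame = np.zeros((6, 8, 3), dtype=np.uint8)
advert = np.full((2, 2, 3), 255, dtype=np.uint8)
augmented = replace_region(frame, advert, (2, 3, 4, 7))
```

Only the pixels inside the box change; the rest of the frame is copied as-is.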

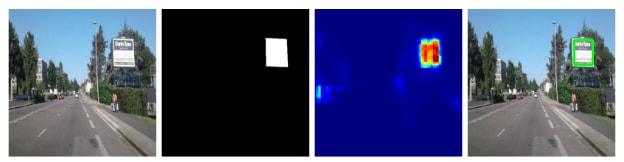

Traditionally, video editors manually check the frames in a video for possible candidates for new advert integration. This is cumbersome and time-consuming. In this project, we propose a deep-learning module called ADNet that automatically detects whether a video frame contains an existing advert. We also propose a lightweight convolutional neural network called DeepAds that can localize the position of the advertisement in the video frame with a high degree of accuracy. The DeepAds model is based on a probabilistic billboard detection framework that assigns to each pixel in a video frame a probability of belonging to the billboard category. In this context, we collected and annotated a large-scale dataset of outdoor scenes (referred to as the ALOS dataset) with manually annotated ground-truth maps. Finally, the bounding box is computed using a deep-learning-based refinement network.

(From left to right) Input image, Binary ground-truth map, Detected advert, Localized advert.
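To make the step from a per-pixel probability map to a localized advert concrete, here is a minimal sketch that thresholds the map and takes the tightest enclosing box. This is an assumed, simplified substitute for illustration only: the project computes the bounding box with a deep-learning-based refinement network, and the function name `bounding_box_from_probability` and the 0.5 threshold are hypothetical choices.

```python
import numpy as np

def bounding_box_from_probability(prob_map, threshold=0.5):
    """Return (top, left, bottom, right) enclosing all pixels whose
    billboard probability is >= threshold, or None if there are none.
    bottom and right are exclusive. Hypothetical helper, not DeepAds."""
    mask = prob_map >= threshold
    if not mask.any():
        return None
    rows = np.flatnonzero(mask.any(axis=1))  # rows containing billboard pixels
    cols = np.flatnonzero(mask.any(axis=0))  # columns containing billboard pixels
    return int(rows[0]), int(cols[0]), int(rows[-1]) + 1, int(cols[-1]) + 1

# Toy probability map: a 2x4 high-probability patch in a 6x8 frame.
prob = np.zeros((6, 8))
prob[2:4, 3:7] = 0.9
box = bounding_box_from_probability(prob)
```

A plain threshold like this is sensitive to stray high-probability pixels, which is one reason a learned refinement stage can be preferable.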

We also explored the idea of identifying candidate spaces in a video frame where new adverts can be artificially implanted. This technology helps advertising agencies generate personalised video content based on consumers’ likes and dislikes. We proposed and released the first large-scale dataset (referred to as the CASE dataset) of candidate placements in outdoor scene images. Our proposed lightweight DeepAds neural model is also effective in identifying new candidate spaces in video frames.

The candidate spaces (marked in pink) can either be floating across the scene, or anchored against walls in the scene.

Demonstration

Results

Please refer to the publications. This work has received extensive media coverage: [tcd] [dcu] [irishtimes] [isin] [adaptcentre] [engineersjournal] [adaptcentre] [technology-ireland] [ibec] [techcentral].